Top 5 Vector Databases for Chatbot Applications: Features and Analysis

November 18th, 2023

Chatbots have become increasingly sophisticated with advancements in artificial intelligence, particularly through the application of vector databases. Rather than matching exact keywords, these databases store numerical representations (vectors) of text and retrieve entries by semantic similarity. This allows for more nuanced and human-like interactions that reflect the context and meaning behind user queries. The rise of vector databases is closely tied to their ability to search high-dimensional data efficiently, significantly enhancing the capabilities of chatbot applications across various industries.

The integration of vector databases into chatbots has ushered in a new era where conversational AI can execute more accurate and efficient semantic searches, leading to improved customer experience and satisfaction. The importance of selecting the right vector database for a chatbot application cannot be overstated; it's a decision that impacts not only the effectiveness of the AI but also the scalability and maintainability of the system itself.

As businesses seek to provide better service and streamline operations, the use of vector databases in powering chatbot functionalities gains prominence. With an array of options available, each offering unique features, performance levels, and complexities, choosing the most appropriate solution requires a comprehensive understanding of their respective advantages and limitations.

![AI vector database](https://images.datacamp.com/image/upload/v1694511370/image11_1d8796f2bb.png)

Understanding vector databases in chatbot technology

The role of vector databases in improving chatbot interactions

Vector databases have become a crucial component in the field of artificial intelligence, particularly for enhancing the performance of chatbots. By utilizing advanced techniques such as semantic search and contextual understanding, vector databases contribute significantly to creating more dynamic and human-like interactions between users and chatbots.

Semantic search is an essential feature of vector databases that allows chatbots to interpret and understand user queries beyond simple keyword matching. Unlike traditional search methods, semantic search considers the intent and contextual meaning behind a query. For instance, if a user asks a chatbot, "What's the forecast today?" instead of searching for the terms 'forecast' and 'today' individually, the chatbot can comprehend the request's overall meaning and provide a weather update. This is achieved by converting text into vectors (embeddings), typically with a machine-learning embedding model, so that texts with similar meanings map to nearby points in vector space. The vector database then finds the stored vectors closest to the query vector, enabling the chatbot to match queries with relevant information even when they share no keywords.
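
To make the idea concrete, here is a minimal sketch of semantic search by cosine similarity. The three-dimensional vectors in `documents` are hypothetical stand-ins for the output of a real embedding model, which would usually produce hundreds of dimensions, and the brute-force loop stands in for the index a vector database would use.

```python
import numpy as np

# Hypothetical embeddings; in practice these come from an embedding model.
documents = {
    "Today's weather: sunny, 24 degrees": np.array([0.9, 0.1, 0.0]),
    "Store opening hours: 9am to 5pm": np.array([0.0, 0.2, 0.9]),
    "How to reset your password": np.array([0.1, 0.9, 0.2]),
}

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def semantic_search(query_vec: np.ndarray, k: int = 1) -> list[tuple[str, float]]:
    """Return the k documents whose embeddings are most similar to the query."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in documents.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical embedding of "What's the forecast today?": it shares no keywords
# with the weather document, but points in a similar direction.
query = np.array([0.8, 0.2, 0.1])
best_text, best_score = semantic_search(query)[0]
print(best_text)
```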

Contextual understanding further enhances a chatbot's ability to interact naturally. A vector database does not track a conversation by itself, but an application can store earlier conversation turns as vectors and retrieve the most relevant ones alongside each new message, so the chatbot keeps track of the flow of the dialogue much as a human would. For example, after providing the weather update, if the user then asks, "And tomorrow?", the chatbot understands that the question relates to the weather without the user needing to mention it explicitly again. This ongoing thread of conversation makes the interaction seamless and intuitive.

User interaction is ultimately what determines the success of a chatbot. Vector databases support this by ensuring that each user's experience is personalized and relevant. Chatbots can analyze past interactions stored as vectors to tailor responses and recommendations based on individual preferences and behaviors. Say a user often asks about sports news; the chatbot can prioritize such topics in future conversations, thus improving user satisfaction.
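
One common way to implement this kind of personalization, sketched below under simplifying assumptions, is to average the embeddings of a user's past messages into a single profile vector and rank candidate topics by their similarity to it. The 2-D vectors and topic names are hypothetical; the text does not say how any particular product does this.

```python
import numpy as np

def normalize(v: np.ndarray) -> np.ndarray:
    """Scale a vector to unit length."""
    return v / np.linalg.norm(v)

# Hypothetical embeddings of a user's past messages (2-D for readability):
# the first axis loosely means "sports", the second "finance".
past_interactions = np.array([
    [0.9, 0.1],   # "Who won the match last night?"
    [0.8, 0.3],   # "Any transfer news?"
    [0.2, 0.9],   # "What's the EUR/USD rate?"
])

# Profile vector = mean of the normalized interaction vectors, re-normalized.
profile = normalize(np.mean([normalize(v) for v in past_interactions], axis=0))

candidate_topics = {
    "sports news": normalize(np.array([1.0, 0.0])),
    "market update": normalize(np.array([0.0, 1.0])),
}

# All vectors are unit length, so the dot product equals cosine similarity.
ranked = sorted(candidate_topics,
                key=lambda topic: float(candidate_topics[topic] @ profile),
                reverse=True)
print(ranked)
```

Because two of the three past messages are about sports, the profile leans toward the sports axis and "sports news" is ranked first.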

Overall, vector databases are transforming how chatbots communicate, making them more intelligent, responsive, and attuned to the nuances of human language. This results in a smoother user experience and paves the way for more sophisticated applications of AI in everyday technology.

Comparison with traditional databases

Vector databases represent an innovative approach to managing data, uniquely suited for handling the complexities of AI applications like chatbots. In contrast, traditional databases have been the backbone of data storage and retrieval for decades. The core difference between traditional and vector databases lies in their handling of structured versus unstructured data.

Traditional databases are designed to store and manage structured data—that is, data that adheres to a predefined schema or format. Think of a spreadsheet where each column represents a different piece of information, such as name, address, and phone number. For example, relational databases, like MySQL, use tables with fixed columns and rows to organize this type of data efficiently. They excel at transaction processing and are ideal for applications where data integrity and consistency are paramount, such as banking systems.

However, when it comes to unstructured data—data that doesn't follow a specific format or structure—traditional databases struggle. Unstructured data includes text, images, audio, and video, which are common elements in chatbot interactions. Processing natural language, for instance, can be challenging because human speech doesn't conform to rigid schemas and often involves nuances and variations.

This is where vector databases come into play. Unlike traditional databases, vector databases are built to search unstructured data by meaning. Data such as text or images is first converted into vectors, numerical representations in a multi-dimensional space, usually by an embedding model that runs either outside the database or, in some products, as a built-in module. The database stores and indexes these vectors, enabling operations such as similarity search, where you find the items whose vector representations are closest to a given query.

To illustrate, consider a chatbot that needs to understand user queries and provide relevant responses. With a vector database, when a user types "What's the weather like today?" the chatbot can analyze the sentence's vector representation and compare it against a database of possible questions and answers to find the closest match. This process allows for more accurate and context-aware responses compared to the exact-match or pattern-based methods used in traditional databases.
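
The contrast with exact matching fits in a few lines. In the sketch below, the FAQ entries and their vectors are hypothetical stand-ins for embedding-model output, and the brute-force `min` over Euclidean distances stands in for a real vector index.

```python
from typing import Optional

import numpy as np

# FAQ: stored question -> (hypothetical embedding, answer)
faq = {
    "What is the weather today?": (np.array([0.95, 0.05, 0.1]), "Sunny with light wind."),
    "When do you open?": (np.array([0.05, 0.9, 0.2]), "We open at 9am."),
}

def exact_match(question: str) -> Optional[str]:
    """Traditional lookup: succeeds only if the text is identical."""
    entry = faq.get(question)
    return entry[1] if entry else None

def nearest_match(query_vec: np.ndarray) -> str:
    """Vector lookup: answer of the stored question closest to the query (L2 distance)."""
    best_question = min(faq, key=lambda q: float(np.linalg.norm(faq[q][0] - query_vec)))
    return faq[best_question][1]

user_text = "What's the weather like today?"
user_vec = np.array([0.9, 0.1, 0.15])   # hypothetical embedding of user_text

print(exact_match(user_text))    # None: the wording differs from the stored question
print(nearest_match(user_vec))   # Sunny with light wind.
```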

Given these capabilities, vector databases can significantly enhance the functionality of chatbots, offering a level of understanding and interaction that is much closer to human-like conversation. However, one must weigh these benefits against potential downsides, such as the need for more specialized knowledge to implement and maintain vector databases effectively. Additionally, because they're relatively new, they might lack some of the robustness and proven scalability present in traditional databases.

In summary, while traditional databases offer reliability and structured data management, vector databases pave the way for advanced AI-driven applications by adeptly processing unstructured data. As chatbot technology continues to evolve, the choice between traditional and vector databases will hinge on the specific requirements of the application and the desired level of user interaction.

Milvus

How Milvus powers chatbot efficiency

To understand the pivotal role Milvus plays in enhancing chatbot efficiency, it's essential to dive into the features that make it a standout option in vector database management. Chatbots powered by AI and machine learning need to process information swiftly and accurately to maintain the flow of conversation with users. Here's how Milvus addresses some of these critical areas:

Real-time Processing

Milvus is built to handle real-time query demands, which is crucial for chatbots that must respond promptly to user queries. It achieves this through efficient indexing and retrieval designed to keep query latency low as data volumes grow. For instance, when a user types a message, Milvus can quickly sift through the indexed vectors, which represent text in numerical form, to find the most relevant responses or information.

Similarity Search

One of the core functionalities of Milvus is its ability to perform similarity searches. This means that when a chatbot receives a question or statement from a user, Milvus compares the semantic meaning encoded in the query vector against a database of pre-existing vectors. It then retrieves the closest matches based on their cosine similarity or Euclidean distance. This process ensures that even if the user's input isn't an exact match to stored data, the chatbot can still provide a response that best aligns with the user's intent.
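
The two metrics can disagree on raw vectors, because Euclidean distance is sensitive to vector length while cosine similarity is not. The sketch below uses made-up vectors to show the disagreement, and then shows that after normalizing every vector to unit length the two metrics agree, since for unit vectors the squared distance equals 2 - 2 * cosine.

```python
import numpy as np

query = np.array([1.0, 1.0])
candidates = {
    "a": np.array([3.0, 3.0]),   # same direction as the query, but longer
    "b": np.array([1.0, 0.6]),   # close in space, slightly different direction
}

def cosine(u: np.ndarray, v: np.ndarray) -> float:
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def euclidean(u: np.ndarray, v: np.ndarray) -> float:
    return float(np.linalg.norm(u - v))

by_cosine = max(candidates, key=lambda k: cosine(query, candidates[k]))
by_euclid = min(candidates, key=lambda k: euclidean(query, candidates[k]))
print(by_cosine, by_euclid)   # a b: the metrics disagree on raw vectors

# After normalization, ||u - v||^2 == 2 - 2 * cos(u, v), so the Euclidean
# nearest neighbor is also the most cosine-similar candidate.
unit = {k: v / np.linalg.norm(v) for k, v in candidates.items()}
q_unit = query / np.linalg.norm(query)
by_euclid_unit = min(unit, key=lambda k: euclidean(q_unit, unit[k]))
print(by_euclid_unit)         # a
```

This is why embeddings are often normalized before indexing: the choice of metric then stops affecting which results come back.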

Let's say a user asks, "How do I reset my password?" Milvus compares the embedding of this question with the stored embeddings of previously indexed questions, such as "What's the way to change my password?" Even though the wording is different, the intent is the same, so the two embeddings lie close together and Milvus helps the chatbot recognize the match.

Vector Indexing

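The efficiency of a chatbot also hinges on how quickly it can access the right information. Milvus employs advanced vector indexing techniques, such as quantization-based and tree-based indexes, as well as graph-based indexes like HNSW, to organize data so that it's readily accessible. By partitioning the vector space (or, in graph-based indexes, linking each vector to its near neighbors), an index lets a query examine only a small fraction of the stored vectors, which reduces search times significantly. The trade-off is that results are approximate: an index may occasionally miss a true nearest neighbor in exchange for speed. Consequently, when a user interacts with a chatbot, the response feels instantaneous because Milvus has streamlined the path to finding the most appropriate data points within the database.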
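
To show why partitioning reduces search time, here is a toy inverted-file (IVF) index in NumPy. It illustrates the general technique, not Milvus's implementation: the centroids are simply sampled from the data (real IVF indexes usually train them with k-means), and the `nlist` and `nprobe` values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n, nlist, nprobe = 16, 5000, 50, 5
data = rng.random((n, dim)).astype(np.float32)

# Coarse quantizer: nlist vectors sampled from the data act as centroids.
centroids = data[rng.choice(n, size=nlist, replace=False)]

def nearest_centroids(x: np.ndarray, count: int) -> np.ndarray:
    """Indices of the `count` centroids closest to x."""
    dists = np.linalg.norm(centroids - x, axis=1)
    return np.argsort(dists)[:count]

# Inverted lists: every vector is filed under its closest centroid.
sq_dists = ((data[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
assignments = np.argmin(sq_dists, axis=1)
inverted_lists = {c: np.flatnonzero(assignments == c) for c in range(nlist)}

def ivf_search(query: np.ndarray, k: int = 5) -> np.ndarray:
    """Approximate search: scan only the nprobe lists nearest to the query."""
    probe = nearest_centroids(query, nprobe)
    candidate_ids = np.concatenate([inverted_lists[c] for c in probe])
    dists = np.linalg.norm(data[candidate_ids] - query, axis=1)
    return candidate_ids[np.argsort(dists)[:k]]

def exact_search(query: np.ndarray, k: int = 5) -> np.ndarray:
    """Brute-force reference: scan every vector."""
    dists = np.linalg.norm(data - query, axis=1)
    return np.argsort(dists)[:k]

query = rng.random(dim).astype(np.float32)
approx, exact = ivf_search(query), exact_search(query)
scanned = sum(len(inverted_lists[c]) for c in nearest_centroids(query, nprobe))
print(f"scanned {scanned} of {n} vectors; recall@5 = {len(set(approx) & set(exact)) / 5:.1f}")
```

Raising `nprobe` scans more lists, improving recall at the cost of speed. This is the same speed-versus-accuracy dial that production indexes expose.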

For a practical example, consider a chatbot for online shopping assistance. When a customer asks for product recommendations, Milvus's vector indexing allows the chatbot to pull up products with attributes most similar to the customer's preferences in mere milliseconds.

By incorporating these technologies, Milvus empowers chatbots to meet and exceed user expectations in delivering timely and contextually relevant interactions, laying the groundwork for more natural and engaging conversational experiences.

Pros and cons of using Milvus

Milvus is an open-source vector database designed to handle the complexities of artificial intelligence and machine learning workloads, including those required for chatbot applications. It's pivotal to weigh both the benefits and challenges when considering Milvus for your project needs.

Advantages of Milvus

One of the primary advantages of using Milvus is its scalability. Thanks to its distributed architecture, it can scale out across additional nodes to accommodate growing data volumes while keeping query performance steady. This makes it a good fit for businesses that anticipate a need to scale their chatbot operations up or down.

Another significant benefit is its flexibility. Milvus supports multiple similarity metrics, such as Euclidean (L2) distance, inner product, and cosine similarity, so the metric can be matched to the embedding model a chatbot uses, which helps retrieval accuracy across a variety of user queries. Additionally, it stores embeddings produced by popular machine learning frameworks like TensorFlow and PyTorch and offers client SDKs for languages including Python, Java, Go, and Node.js, simplifying the development process.

Milvus provides efficient real-time vector searches, enabling chatbots to retrieve the most relevant responses quickly. This significantly enhances the user experience by reducing wait times for answers to queries.

The system's high availability is another merit. With features such as data replication and automatic failover, Milvus ensures that chatbot services remain uninterrupted even in the event of hardware failure.

Lastly, being open-source means users benefit from continuous community-driven improvements and the option to contribute to the development of the database themselves.

Limitations

Despite its strengths, Milvus has some limitations. It requires a certain level of technical expertise to deploy and maintain effectively. Smaller teams or those without dedicated IT support might find this challenging.

Another limitation is related to resource consumption. Because Milvus is built to handle large-scale vector data, it can be resource-intensive, necessitating robust hardware for optimal performance. For organizations with limited computing resources, this could pose a problem.

Use Case Scenarios

Milvus shines in various use case scenarios. In e-commerce, chatbots powered by Milvus can efficiently handle customer service by quickly pulling up customer data, order history, and tracking information to answer inquiries.

In healthcare, a Milvus-powered chatbot can aid patients by answering frequently asked questions, scheduling appointments, and even providing preliminary diagnoses based on symptoms described in natural language.

For content discovery platforms like news aggregators or streaming services, chatbots with Milvus under the hood can offer personalized recommendations by understanding user preferences and matching them to a vast database of content vectors.

While Milvus is a powerful tool for enhancing chatbot applications, it's essential to consider these pros and cons carefully to determine if it aligns with your specific use case and organizational capabilities.

Weaviate

Enhancing chatbot AI with Weaviate features

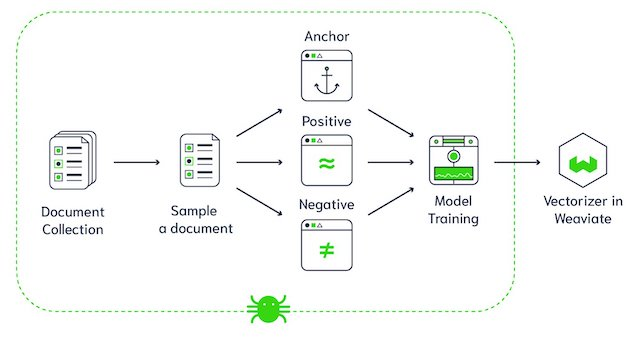

Weaviate takes chatbot intelligence to the next level by incorporating AI features that enable more natural and effective user interactions. One of these features is the 'contextionary' (the text2vec-contextionary vectorizer module), which serves as a context-aware dictionary for the AI: it represents words as vectors learned from their usage in different contexts. Weaviate also offers other vectorizer modules, including transformer-based ones. This semantic knowledge allows Weaviate to interpret user queries more accurately, leading to responses that are not just keyword-based but also reflect the intent behind the words.

For instance, if a user asks a chatbot about "apple," the contextionary can help determine whether they're referring to the fruit or the tech company, based on additional words or previous interactions. This depth of understanding significantly enhances the chatbot's ability to engage in meaningful conversations.

The power of Weaviate is further amplified by its vector search capabilities. Chatbots powered by Weaviate convert text input into high-dimensional vectors, numerical representations that capture the meaning of words and phrases in context. When a user inputs a question or statement, the chatbot uses vector search to find the most semantically similar vectors among the stored data. This method ensures that the chatbot's response is drawn from the most relevant information available, whether that is a pre-programmed answer or content added to the database over time.

To illustrate, imagine a user typing "I need tips for indoor gardening" to a chatbot. Weaviate will transform this query into a vector and compare it to existing vectors in its database. It'll then provide the user with the best available resources or advice on indoor gardening, drawing upon the closest match in meaning rather than relying solely on keyword overlap.

Lastly, Weaviate's schema-based data model establishes a clear structure for the information that the chatbot can learn and retrieve. This data model defines types of concepts and relationships that exist within the chatbot's knowledge base, making it easier to categorize and access information efficiently. For example, in a schema-based data model for an e-commerce chatbot, product entities could have attributes such as price, category, and reviews, while also being linked to related entities like customers and transactions through defined relationships.

Creating a schema in Weaviate involves defining classes and properties that represent the knowledge domains your chatbot will handle. In outline, the steps are:

- Identify the main entities, such as "Product," "Customer," or "Order," that your chatbot needs to know about.

- Define properties for each entity. For example:

  - Product might have "name," "price," and "description."

  - Customer could include "name," "contact info," and "purchase history."

- Specify relationships between entities. You might indicate that a "Customer" places an "Order" and an "Order" contains multiple "Products."

- Implement this schema using Weaviate's APIs or configuration tools to guide the chatbot in structuring and retrieving data.
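
As an illustration, the schema from these steps could be written as class definitions in the JSON shape that Weaviate's schema REST endpoint (`/v1/schema`) accepts, shown here as Python dictionaries. The class and property names are hypothetical. "contact info" becomes `contactInfo` because Weaviate property names must be valid GraphQL names, and a cross-reference is declared by using another class's name as the `dataType`. Check the Weaviate documentation for your version before creating these.

```python
import json

# Hypothetical e-commerce schema in the shape of Weaviate class definitions.
product_class = {
    "class": "Product",
    "description": "An item the shop sells",
    "properties": [
        {"name": "name", "dataType": ["text"]},
        {"name": "price", "dataType": ["number"]},
        {"name": "description", "dataType": ["text"]},
    ],
}

customer_class = {
    "class": "Customer",
    "properties": [
        {"name": "name", "dataType": ["text"]},
        {"name": "contactInfo", "dataType": ["text"]},
    ],
}

# Relationships: an Order references the Customer who placed it and the
# Products it contains. A customer's purchase history can then be found
# through the Orders that reference that customer.
order_class = {
    "class": "Order",
    "properties": [
        {"name": "placedBy", "dataType": ["Customer"]},
        {"name": "containsProducts", "dataType": ["Product"]},
    ],
}

# Create referenced classes first, so Order comes last.
schema_classes = [product_class, customer_class, order_class]

for cls in schema_classes:
    print(json.dumps(cls))  # one request body per class
```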

By bringing all these features together, Weaviate offers a robust foundation for creating chatbots that not only understand and respond to user queries more effectively but can also be kept current as new data is added over time.

Faiss

Faiss's impact on large-scale chatbot applications

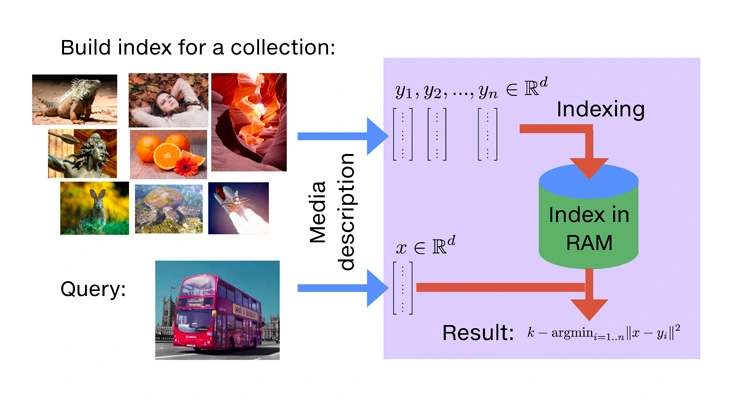

When dealing with extensive chatbot interactions, databases need to handle a vast amount of data efficiently. Faiss, developed by Facebook AI Research, has been engineered specifically for large-scale vector search and has proven to be highly beneficial for chatbots that must process and understand large datasets.

Faiss excels in large dataset handling. It is optimized for both speed and accuracy when searching through millions or even billions of vectors. For a chatbot application, this means faster response times and more relevant answers to user queries, even when the underlying data is massive. This performance is achieved through advanced indexing strategies that Faiss employs, which compress and organize the vectors in a way that speeds up the search without significant loss of precision.
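
The compression idea can be illustrated with scalar quantization, one of several encodings Faiss offers (product quantization is another). The NumPy sketch below is a simplified stand-in, not Faiss code. It maps each float32 component to one byte using per-dimension minimum and maximum values, which cuts memory fourfold while keeping each component within half a quantization step of its original value.

```python
import numpy as np

rng = np.random.default_rng(42)
data = rng.random((2000, 32)).astype(np.float32)

# "Training": split each dimension's observed range into 256 levels.
lo, hi = data.min(axis=0), data.max(axis=0)
scale = (hi - lo) / 255.0

def encode(x: np.ndarray) -> np.ndarray:
    """float32 vectors -> uint8 codes, one byte per component."""
    return np.clip(np.round((x - lo) / scale), 0, 255).astype(np.uint8)

def decode(codes: np.ndarray) -> np.ndarray:
    """uint8 codes -> approximate float32 vectors."""
    return (codes.astype(np.float32) * scale + lo).astype(np.float32)

codes = encode(data)
print(data.nbytes, "->", codes.nbytes, "bytes")   # 256000 -> 64000 bytes

def search_compressed(query: np.ndarray, k: int = 5) -> np.ndarray:
    """Brute-force search over the decoded (lossy) vectors."""
    dists = np.linalg.norm(decode(codes) - query, axis=1)
    return np.argsort(dists)[:k]

max_error = float(np.abs(decode(codes) - data).max())
print(f"max per-component error: {max_error:.4f}")
```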

Another feature that sets Faiss apart is its capability for efficient clustering. Clustering refers to the grouping of similar vectors together. In a chatbot context, clustering allows the system to categorize similar questions or phrases, making it easier to identify the intent behind a user's message. By using Faiss to cluster chat data, developers can train their chatbot to provide more accurate responses based on the clusters identified.
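
A bare-bones k-means loop shows what clustering does here. Faiss ships its own k-means implementation (`faiss.Kmeans`); the NumPy version below is only an illustration, and the two-dimensional "query embeddings" are synthetic points around two hypothetical intents.

```python
import numpy as np

rng = np.random.default_rng(1)
# Synthetic query embeddings around two hypothetical intents.
billing = rng.normal(loc=[0.0, 5.0], scale=0.3, size=(20, 2))
shipping = rng.normal(loc=[5.0, 0.0], scale=0.3, size=(20, 2))
queries = np.vstack([billing, shipping])

def kmeans(x: np.ndarray, k: int, iters: int = 10) -> tuple[np.ndarray, np.ndarray]:
    # Farthest-first initialization: start at x[0], then repeatedly add the
    # point farthest from every centroid chosen so far.
    centroids = [x[0]]
    for _ in range(1, k):
        dist_to_nearest = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[np.argmax(dist_to_nearest)])
    centroids = np.array(centroids)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Update step: move each centroid to the mean of its assigned points.
        centroids = np.array([x[labels == j].mean(axis=0) for j in range(k)])
    return centroids, labels

centroids, labels = kmeans(queries, k=2)
print(labels)  # the first 20 queries share one label, the last 20 the other
```

Each resulting cluster can then be reviewed and mapped to an intent, such as "billing question" or "shipping question".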

Furthermore, Faiss supports batched search, which means that instead of processing one query at a time, it can handle several queries simultaneously. This batch processing can significantly speed up the operation since multiple user inputs can be compared against the database in one go. This is particularly useful for chatbots during peak times when there may be a surge in user interaction.

Tutorial: Implementing Basic Search with Faiss

Here is a simple example to illustrate how to use Faiss for vector search within a chatbot application:

Install Faiss: First, ensure you have Python installed, then install Faiss via pip:

```sh
pip install faiss-cpu  # for CPU
# or
pip install faiss-gpu  # for GPU
```

Create an Index: You'll need an index to store your vectors; here's how to create one:

```python
import faiss
import numpy as np

d = 64                            # dimension of vectors
nb = 100000                       # number of vectors to index
np.random.seed(1234)              # make reproducible
xb = np.random.random((nb, d)).astype('float32')
xb[:, 0] += np.arange(nb) / 1000.

index = faiss.IndexFlatL2(d)      # build a flat (exact, brute-force L2) index
print(index.is_trained)           # True: a flat index needs no training
index.add(xb)                     # add vectors to the index
print(index.ntotal)               # number of indexed vectors
```

Batched Search: To perform a batched search through your index:

```python
nq = 10                           # number of queries
np.random.seed(1234)              # same seed: the queries equal the first 10 indexed vectors,
                                  # so each query's nearest neighbor should be itself
xq = np.random.random((nq, d)).astype('float32')
xq[:, 0] += np.arange(nq) / 1000.

k = 4                             # return 4 nearest neighbors
distances, indices = index.search(xq, k)  # one call searches all nq queries

print(indices[:5])                # neighbors of the first 5 queries
print(distances[:5])              # squared L2 distances of those neighbors
```

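This straightforward tutorial shows how easily Faiss can be integrated into a chatbot application to index a large set of vectors and execute batched searches. The flat index used here performs exact, brute-force search; for larger datasets, Faiss's partitioned and compressed indexes (such as IVF and PQ variants) trade a little accuracy for much higher speed, boosting the overall efficiency and user experience of the chatbot.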

Examining the strengths and weaknesses of Faiss

Faiss, developed by Facebook's AI Research team, is a library specifically designed to provide efficient similarity search and clustering of dense vectors. Because it runs inside your application rather than as a standalone database server, concerns such as serving, replication, and access control are left to the surrounding system. It has become an essential tool for many applications that require searching through high-dimensional spaces, such as chatbot applications that rely on understanding and processing human language at scale. Here, we delve into the benefits and limitations of using Faiss in your projects.

Positive Aspects of Faiss

One of the most significant advantages of Faiss is its exceptional speed when it comes to searching through large datasets. Faiss efficiently handles billions of vectors, making it ideal for industries where real-time search capabilities are paramount. It employs state-of-the-art algorithms optimized for both accuracy and performance, ensuring that chatbots can quickly find the most relevant responses or information.

Another positive aspect is Faiss's ability to perform clustering of vectors, which can be handy for organizing data or pre-processing steps in chatbot applications. Clustering can help categorize user inputs, group similar queries, or even assist in intent recognition—a core component of any conversational AI system.

Furthermore, Faiss scales across hardware: it runs on both CPUs and GPUs, allowing developers to make effective use of their existing infrastructure. With GPU support, Faiss can capitalize on parallel processing to further enhance its speed and efficiency.

Negative Aspects

However, Faiss does have its drawbacks. One notable issue is the steep learning curve, especially for those not familiar with vector search principles or high-dimensional data handling. The comprehensive nature of the library means there are numerous parameters and configurations that can be daunting to beginners.

From a practical standpoint, Faiss requires careful management of resources. When deployed in large-scale environments or with extensive datasets, it can consume substantial memory and computing power. This technical demand may necessitate more robust hardware, leading to increased operational costs.

Despite these challenges, Faiss’s documentation and community support do offer guidance to overcome some of these hurdles. New users are encouraged to explore the tutorials provided in the Faiss GitHub repository to get a better grasp on how to integrate it into their systems.

Technical Requirements

To use Faiss effectively, several technical requirements must be met. Firstly, Faiss is compatible with Linux, macOS, and Windows, but it is optimized for Unix-based systems, which should be taken into account when planning the deployment environment.

Installation typically involves Python, as Faiss has a PyPI package available. It's also important to have a compatible version of NumPy since Faiss interfaces with Python primarily through this library. For those looking to exploit GPU acceleration, a CUDA-compatible graphics card and the corresponding toolkit must be installed, which also entails managing the correct driver versions.

For instance, a developer looking to implement Faiss for a chatbot application might follow these simplified steps:

Ensure that Python and NumPy are installed:

```shell
pip install numpy
```

Install the Faiss CPU-only version via pip:

```shell
pip install faiss-cpu
```

If GPU support is desired and the necessary CUDA environment is set up, the GPU version can be installed instead:

```shell
pip install faiss-gpu
```

Once installed, developers can utilize Faiss's indexing capabilities to store and search through vectors representing user queries, providing the backbone for a chatbot's understanding and matching abilities.

In summary, while Faiss presents certain complexities, its benefits—in speed, efficiency, and scalability—make it a powerful choice for enhancing the capabilities of chatbot applications. With proper setup and attention to technical prerequisites, Faiss can significantly improve the responsiveness and accuracy of conversational AI systems.

Pinecone

How Pinecone simplifies chatbot vector searches

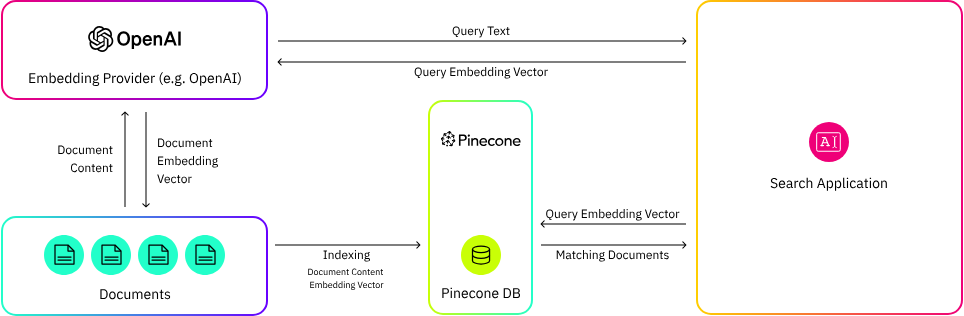

In the realm of chatbot technology, the ability to accurately comprehend and respond to user inquiries is paramount. Pinecone, a vector search service, addresses this need by streamlining how chatbots locate and retrieve the most relevant information through its SaaS (Software as a Service) platform.

Pinecone operates as a fully-managed SaaS, meaning that users don't have to worry about the complexities of infrastructure management. This is particularly advantageous for businesses without extensive technical resources or those looking to deploy sophisticated chatbot solutions quickly. As a cloud-based service, Pinecone allows developers to focus on building and improving their chatbots instead of managing servers and databases.

One of the key tasks in enabling a chatbot to understand and process natural language is indexing vectors. Vectors are mathematical representations of text that machines can efficiently analyze. When a user interacts with a chatbot, their input — be it text or voice — is converted into vectors. Pinecone excels at indexing these vectors, making it easy for chatbots to match queries with the correct responses or information. Here’s a simple example of how vector indexing with Pinecone might work:

- First, the chatbot processes user input to convert text into a vector using a pre-trained machine learning model.

- Once the input is vectorized, Pinecone's indexing system quickly searches through its database of pre-indexed vectors to find the closest matches.

- The chatbot then retrieves the most relevant response based on the vector similarities.

The importance of metadata handling shouldn't be underestimated either. Metadata refers to data about the data — such as timestamps, user IDs, or categories. With Pinecone, you can attach metadata to each vector indexed. This feature becomes invaluable when you want your chatbot to consider context, user history, or specific attributes while responding. For instance, if you run an e-commerce store, metadata can help your chatbot remember previous interactions with a customer, including viewed products or past purchases, allowing for more personalized recommendations.

To get started with Pinecone for your chatbot application, follow these steps:

- Sign up for an account with Pinecone and create a new index.

- Integrate your chatbot application with Pinecone using the provided SDKs and APIs.

- Index your existing FAQ or knowledge base articles in Pinecone, converting them into vectors along with relevant metadata.

- Configure your chatbot to send user queries to Pinecone and receive the most applicable answers based on vector similarity.

By leveraging Pinecone's SaaS solution, companies can enhance the capabilities of their chatbots, making them more efficient in understanding and interacting with users. The simplicity of setting up and maintaining Pinecone, combined with powerful features like vector indexing and metadata handling, positions it as an attractive choice for businesses looking to elevate their chatbot experiences.

Analyzing Pinecone's advantages and potential issues

Pinecone is a managed vector database service that's designed to streamline the integration of vector search into various applications, including chatbots. It's gaining recognition for its ability to handle complex queries with speed and precision. When considering Pinecone as the backbone for your chatbot application, it's essential to weigh its benefits against any potential challenges you may face.

Benefits of Pinecone

One of the most significant advantages of using Pinecone is its "simplicity." As a fully-managed service, Pinecone takes care of the underlying infrastructure, allowing developers to focus on building and refining their chatbot's capabilities rather than managing database operations. This simplicity extends to scaling; as your chatbot's user base grows, Pinecone can scale to meet increased demands without manual intervention.

Another benefit is "performance." Pinecone offers low-latency responses to vector similarity searches, which is crucial for providing fast and relevant answers in a chatbot context. This performance is maintained even as datasets grow, ensuring that the user experience remains consistent.

Additionally, Pinecone supports "upserts" and "deletes," enabling real-time updates to the vector index. This feature ensures that your chatbot can quickly adapt to new information or changes in user preferences, making it more dynamic and intelligent over time.
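
The semantics are easy to state in code: an upsert inserts a vector under an ID or overwrites the one already stored there, and a delete removes it so it can no longer be returned. The dictionary-backed class below is a hypothetical, single-process illustration of those semantics, not Pinecone's client.

```python
import numpy as np

class TinyVectorIndex:
    """Hypothetical in-memory index illustrating upsert/delete semantics."""

    def __init__(self) -> None:
        self._vectors: dict[str, np.ndarray] = {}

    def upsert(self, vec_id: str, vector) -> None:
        # Insert if the ID is new, overwrite if it already exists.
        self._vectors[vec_id] = np.asarray(vector, dtype=float)

    def delete(self, vec_id: str) -> None:
        # Removing an unknown ID is a no-op.
        self._vectors.pop(vec_id, None)

    def query(self, vector, top_k: int = 1) -> list[str]:
        """IDs of the top_k stored vectors closest to `vector` (L2 distance)."""
        q = np.asarray(vector, dtype=float)
        ids = sorted(self._vectors, key=lambda i: float(np.linalg.norm(self._vectors[i] - q)))
        return ids[:top_k]

index = TinyVectorIndex()
index.upsert("faq-returns", [0.9, 0.1])
index.upsert("faq-shipping", [0.1, 0.9])
print(index.query([0.2, 0.8]))   # ['faq-shipping']

# The shipping answer is rewritten and re-embedded: overwrite it in place...
index.upsert("faq-shipping", [0.8, 0.2])
# ...and the outdated returns FAQ is retired entirely.
index.delete("faq-returns")
print(index.query([0.9, 0.1]))   # ['faq-shipping']
```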

Challenges

However, there are some challenges you might encounter when implementing Pinecone. The first challenge is the "learning curve." While Pinecone itself is designed to be straightforward, understanding how to effectively utilize vector databases for chatbots may require some upskilling for those accustomed to traditional relational databases.

The second challenge is "integration." Depending on your existing technology stack, integrating Pinecone might need additional customization. This process can sometimes be complex, so it's important to have a solid strategy and possibly seek support from Pinecone's technical team.

Finally, we have "dependency." By opting for a managed service, you rely on Pinecone's continuous operation and updates. It's vital to consider how this dependency aligns with your chatbot's availability requirements and how you would manage any potential service disruptions.

Cost Analysis

When it comes to cost, Pinecone operates on a usage-based pricing model. This means you only pay for what you use, which can be cost-effective for applications with fluctuating workloads. However, if your chatbot application consistently processes large volumes of data, costs can accumulate quickly. Therefore, it's important to perform a thorough cost analysis based on your projected usage.
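
Because dataset size is one input to any usage-based estimate, it helps to know how much raw vector data you will store. The helper below is a rough, hypothetical calculation of the float32 payload only. It ignores index overhead, metadata, and replicas, and says nothing about Pinecone's actual prices. The 1536-dimension figure is an assumed example; use your own embedding model's dimension.

```python
def raw_vector_bytes(num_vectors: int, dimension: int, bytes_per_value: int = 4) -> int:
    """Raw size of the embeddings alone (float32 = 4 bytes per component)."""
    return num_vectors * dimension * bytes_per_value

# Example: 1 million knowledge-base chunks embedded with a 1536-dimensional model.
size = raw_vector_bytes(1_000_000, 1536)
print(f"{size:,} bytes = {size / 1e9:.2f} GB")   # 6,144,000,000 bytes = 6.14 GB
```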

To help with this, you can use Pinecone's pricing calculator available on their website. Simply input your expected number of vector queries, the size of your dataset, and other relevant details to get an estimate of the monthly charges. This exercise will give you a clearer picture of whether Pinecone fits your budget and how it compares to maintaining your own vector database infrastructure.

In conclusion, Pinecone presents a powerful option for developers looking to incorporate sophisticated search functionality into their chatbot applications. By understanding its benefits and anticipating potential challenges, you can make an informed decision about whether it's the right tool for your needs.

Vald

Leveraging Vald for resilient chatbot services

Vald is a highly resilient, cloud-native vector search engine designed to run on Kubernetes, and it offers robust features to support the demands of modern chatbot applications. Its architecture distributes vectors and workload across multiple nodes, each of which runs approximate nearest neighbor search using the NGT library. This distribution helps your chatbot answer queries quickly, keeping user interactions smooth and efficient.

Embracing a Distributed Vector Network

The backbone of Vald's resilience is its distributed vector network. Think of this as a vast web of interconnected computers (nodes), each holding a piece of the puzzle. When a user interacts with a chatbot powered by Vald, their query is quickly transformed into a vector—a set of numerical values representing the essence of the query. This vector is then compared against a huge library of other vectors already stored in the network. Because these vectors are spread out over the entire network, the system can perform parallel searches, significantly speeding up the process and providing accurate results in real time.
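
This parallel search follows a scatter-gather pattern: every node searches only its own shard and returns a local top-k, and a gateway merges those lists into a global top-k. The sketch below simulates the pattern in one process with a thread pool. The shard count and data are arbitrary, and it is a generic illustration rather than Vald's code (Vald itself is written in Go and uses NGT on each node).

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

import numpy as np

rng = np.random.default_rng(7)
vectors = rng.random((3000, 8))
num_shards = 4

# Distribute vector IDs across shards round-robin (each shard plays one node).
shards = [np.arange(s, len(vectors), num_shards) for s in range(num_shards)]

def search_shard(ids: np.ndarray, query: np.ndarray, k: int) -> list[tuple[float, int]]:
    """Local brute-force top-k on one shard, as (distance, global_id) pairs."""
    dists = np.linalg.norm(vectors[ids] - query, axis=1)
    best = np.argsort(dists)[:k]
    return [(float(dists[i]), int(ids[i])) for i in best]

def distributed_search(query: np.ndarray, k: int = 5) -> list[int]:
    # Scatter: query all shards concurrently.
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        partials = list(pool.map(lambda ids: search_shard(ids, query, k), shards))
    # Gather: the global top-k is always contained in the union of local top-k lists.
    merged = heapq.nsmallest(k, (hit for part in partials for hit in part))
    return [gid for _, gid in merged]

query = rng.random(8)
print(distributed_search(query))
```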

For example, if a customer asks a chatbot where they can buy a pair of sneakers, the chatbot will convert this question into a vector and scour through its network to find the most relevant response, directing the user to a product page or store locator.

Dynamic Auto Scaling for Seamless Performance

One of the challenges with traditional databases is handling fluctuating workloads without compromising performance. Vald addresses this through autoscaling on Kubernetes, which adjusts computing resources based on current demand. During peak hours, when many users are conversing with your chatbot, Vald scales up, adding replicas to manage the increased load. Conversely, during quieter periods, it scales down, reducing resources to minimize costs without affecting the user experience.

Auto scaling helps your chatbot stay swift and responsive whether it is assisting ten users or ten thousand. Once you have set the scaling bounds and target metrics, adjustments happen without manual intervention, although it is still worth monitoring them to confirm the settings suit your traffic.
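Since Vald runs on Kubernetes, scaling decisions like these are typically made by the Kubernetes Horizontal Pod Autoscaler (HPA). The sketch below reproduces the HPA's documented core rule, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), together with its default 10% tolerance and the minimum and maximum bounds you configure. It is a simplified model for intuition only: the real controller also applies stabilization windows, scaling policies, and pod-readiness handling, and whether a given Vald component is autoscaled depends on how your deployment is configured. The function name and example numbers are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,  # Kubernetes' default HPA tolerance
) -> int:
    """Simplified Horizontal Pod Autoscaler rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within the tolerance, then clamped to [min, max].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Peak hours: 4 pods averaging 90% CPU against a 50% target, so scale up.
print(desired_replicas(4, 90, 50, min_replicas=2, max_replicas=10))  # 8
# Quiet period: 4 pods averaging 10% CPU, so scale down, but never below the minimum.
print(desired_replicas(4, 10, 50, min_replicas=2, max_replicas=10))  # 2
```

The minimum and maximum bounds are the "parameters" you set: the minimum protects baseline latency during quiet periods, and the maximum caps how far a traffic spike can push resource usage.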

Ensuring Data Integrity with Effective Data Recovery

The nightmare scenario for any chatbot service is losing valuable data due to unexpected failures. Vald reduces this risk with two mechanisms: it can replicate each vector across several agent nodes (the number of replicas is configurable), and it can automatically back up each node's index to persistent storage. If one node fails, queries can still be served by the nodes holding replicas, and a restarted node can restore its index from the backup.
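Why replication protects against a single node failure can be shown with a small, self-contained model: each vector ID is stored on `replicas` distinct nodes, so losing any one node still leaves at least one copy of every vector. The placement below uses rendezvous (highest-random-weight) hashing purely as an example; it is not Vald's own placement logic, and the node names and the `place` and `survives_failure` helpers are hypothetical.

```python
import hashlib


def _weight(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, key) pair."""
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(key: str, nodes: list[str], replicas: int) -> list[str]:
    """Rendezvous hashing: store the key on the `replicas` nodes with the highest weight."""
    return sorted(nodes, key=lambda node: _weight(node, key), reverse=True)[:replicas]


def survives_failure(keys: list[str], nodes: list[str], replicas: int, failed: str) -> bool:
    """True if every key still has at least one copy on a node other than `failed`."""
    return all(any(node != failed for node in place(k, nodes, replicas)) for k in keys)


nodes = ["agent-0", "agent-1", "agent-2", "agent-3"]  # hypothetical node names
keys = [f"doc-{i}" for i in range(1000)]

print(place("doc-42", nodes, replicas=2))  # two distinct nodes hold doc-42
print(all(survives_failure(keys, nodes, 2, failed=n) for n in nodes))  # True
print(all(survives_failure(keys, nodes, 1, failed=n) for n in nodes))  # False
```

With a single copy, whichever node fails takes its vectors with it; with two copies, every vector survives the loss of any one node, at the cost of storing the index twice.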

Moreover, Vald is designed to be self-healing: Kubernetes automatically restarts failed pods, and restarted agents can reload their indexes from backup. Combined with replication, this means a partial failure is unlikely to wipe out your chatbot's knowledge base, so the service can keep assisting users.

Integrating Vald into a chatbot application strengthens the service's resilience and, with it, the overall user experience. By combining a distributed vector network with auto scaling and dependable data recovery, Vald makes a strong case for businesses aiming to deliver superior chatbot services.

Pros and cons of integrating Vald with chatbots

Integrating Vald into your chatbot infrastructure can significantly enhance its capabilities, but it's essential to weigh the benefits against potential challenges. As a highly scalable vector database optimized for Kubernetes, Vald offers several advantages that make it attractive for developers looking to build or improve conversational AI systems.

Merits of Vald

One of the primary merits of using Vald is its scalability. Designed for cloud-native environments, it can handle large volumes of data efficiently, making it well suited to chatbots that need access to extensive knowledge bases or datasets. Moreover, Vald's automatic backup and self-healing features help maintain high availability and resilience, which are crucial for consistent chatbot performance even when hardware fails or the network is disrupted.

Another significant advantage is its distributed vector network, which supports fast searches and updates to the database. This translates into faster response times for chatbots, improving the user experience during interactions. Additionally, because Vald stores and searches plain vectors, it works with any kind of data that can be embedded: text, images, and audio can all be searched, provided an embedding model first converts them into vectors. Vald's customizable ingress filters can be used to call such a model on incoming requests, helping chatbots retrieve accurate and relevant responses across data types.
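One detail behind Vald's fast updates is that its documentation describes indexing as asynchronous: inserts are accepted immediately and folded into the search index in the background, so searches are not blocked while indexing runs. The trade-off is that a newly inserted vector may not be searchable until the next indexing pass. The toy class below models that trade-off with a pending buffer and an explicit `build_index()` step. The class, its `flush_threshold` knob, and its methods are hypothetical, and it uses exhaustive search rather than Vald's NGT graph index.

```python
class AsyncIndexToy:
    """Toy model of asynchronous indexing: writes go to a buffer; searches see only the index."""

    def __init__(self, flush_threshold: int = 3) -> None:
        self.flush_threshold = flush_threshold  # hypothetical knob: index once this many writes are pending
        self.pending: dict[str, list[float]] = {}
        self.index: dict[str, list[float]] = {}

    def insert(self, vid: str, vector: list[float]) -> None:
        """Accept the write immediately; it becomes searchable after the next indexing pass."""
        self.pending[vid] = vector
        if len(self.pending) >= self.flush_threshold:
            self.build_index()

    def build_index(self) -> None:
        """Fold buffered vectors into the searchable index (a background job in a real system)."""
        self.index.update(self.pending)
        self.pending.clear()

    def search(self, query: list[float], k: int = 1) -> list[str]:
        """Exhaustive nearest-neighbor search over indexed vectors only."""
        def distance(vid: str) -> float:
            return sum((a - b) ** 2 for a, b in zip(self.index[vid], query))

        return sorted(self.index, key=distance)[:k]


db = AsyncIndexToy(flush_threshold=3)
db.insert("faq-shipping", [1.0, 0.0])
print(db.search([1.0, 0.0]))  # [] because the vector is accepted but not yet indexed
db.build_index()              # a real system would run this step automatically
print(db.search([1.0, 0.0]))  # ['faq-shipping']
```

For a chatbot, this means a freshly added FAQ entry may take a short while to appear in answers, which is usually an acceptable price for keeping search latency steady during heavy write traffic.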

Vald also provides auto-scaling capabilities based on the workload, thus optimizing resource utilization and potentially reducing operational costs. It manages resources dynamically, which is particularly useful during unexpected spikes in chatbot usage.

Drawbacks

While Vald presents numerous benefits, there are drawbacks to consider. The first is deployment complexity. Being a cloud-native system, Vald requires a Kubernetes environment to run, which can mean a steep learning curve for organizations or developers new to Kubernetes. This added layer of complexity can lead to longer deployment times and may require additional expertise or training for your team.

Furthermore, Vald's advanced features require ongoing monitoring and management. To take full advantage of them and keep everything running smoothly, you'll likely need dedicated staff who know how to operate and troubleshoot a Kubernetes ecosystem.

Lastly, while Vald's auto-scaling is beneficial, it may result in variable costs that are hard to predict. Depending on the traffic and the number of resources used, expenses could fluctuate, making budgeting more challenging compared to solutions with fixed pricing models.
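A quick back-of-the-envelope calculation shows why autoscaled costs are harder to budget. The sketch below prices a day of hourly replica counts at a flat per-pod-hour rate. The replica traces and the $0.10 rate are invented for illustration and do not reflect real Vald or cloud pricing.

```python
def daily_cost(hourly_replicas: list[int], price_per_pod_hour: float) -> float:
    """Cost of one day given the number of running pods in each hour."""
    return sum(hourly_replicas) * price_per_pod_hour


PRICE = 0.10  # hypothetical $ per pod-hour

quiet_day = [2] * 24                       # stays at the minimum all day
busy_day = [2] * 8 + [6] * 10 + [3] * 6    # a daytime peak triggers scale-up
spike_day = [2] * 8 + [10] * 16            # a viral spike holds the maximum for 16 hours

for name, trace in [("quiet", quiet_day), ("busy", busy_day), ("spike", spike_day)]:
    print(f"{name}: ${daily_cost(trace, PRICE):.2f}")
# quiet: $4.80
# busy: $9.40
# spike: $17.60
```

With the same configuration, the daily bill in this example varies by more than a factor of three. A fixed replica count would make it constant, which is why the maximum replica bound often doubles as a budget ceiling.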

In summary, Vald is a powerful tool that can significantly boost the functionality of chatbot applications. However, the complexities associated with its deployment and maintenance must be carefully considered before integration.

Selecting the right vector database can make a significant difference in the performance and capabilities of chatbot applications. As we have explored, each database offers its own set of features suited to different aspects of chatbot functionality. Milvus stands out for its open-source nature and scalability, while Weaviate brings GraphQL querying and automatic classification into play. Faiss offers high efficiency on large-scale datasets, Pinecone simplifies vector search with its managed service, and Vald provides a resilient cloud-native solution.

AI-driven interactions continue to evolve, and much of this progress depends on databases that can store and search representations of complex, unstructured data. The vector databases discussed here are at the forefront of this technology, enabling more natural and intuitive chatbot conversations. By carefully weighing the pros and cons of each, developers can use these tools to build chatbot applications that are not only responsive but also better attuned to the nuances of human language.

As businesses continue to invest in AI and machine learning, the choice of a vector database could well be the deciding factor in providing a superior user experience. The future of human-computer interaction is likely to rely heavily on the seamless integration of databases like these, pushing the boundaries of what is possible in automated communication. With the right vector database, chatbots have the potential to become more than just a tool: they can become conversational partners capable of driving meaningful engagement.
